Welcome Back Dear Readers!

That last Let’s Program went pretty well. Built a nifty little chatbot and got some good reader feedback. So let’s give this a second go. Our topic this time? Swarm Intelligences!

What Is A Swarm Intelligence?

A swarm intelligence is a large-scale alien brain capable of controlling billions and billions of deadly warriors at one time. They grow stronger and smarter by absorbing the DNA and memories of other species. They are ruthless, efficient and unstoppable.

I’m Pretty Sure That Isn’t Right

Oh, sorry. Looks like my old Starcraft manual got mixed in with my research material. My bad. Good game though. I really ought to get around to buying the sequel. Rumor has it this one has 3D graphics and everything!

Anyways, let’s start over!

What Is A Swarm Intelligence?

The phrase “Swarm Intelligence” refers to a bunch of different problem solving algorithms that were all inspired by watching how real life animals make group decisions. Things like flocks of birds, schools of fish, colonies of ants and swarms of bees have all inspired their own types of swarm intelligence. While each swarm intelligence algorithm is different, most are based around the idea of creating a large number of semi-independent problem solving programs that work together and share information. Why have just one AI working on your problem when you can have 100?

Of course, the fact that there are so many different kinds of swarm intelligences means we can’t really just program a generic “Swarm Intelligence”. We’re going to have to choose a specific kind. After a little research I decided that this Let’s Program is going to focus on a type of AI called a “Particle Swarm Optimizer”.

So for the rest of this series when I say “Swarm Intelligence” know that I’m probably referring to a “Particle Swarm Optimizer”. But always remember, there are lots of different kinds of swarm intelligence and they all work differently.

What Is A Particle Swarm Optimizer?

A “Particle Swarm Optimizer” is an optimizer that uses a particle swarm to do its optimizing.

That last sentence was very very accurate but it honestly wasn’t very useful. Let’s break it down a little further. First off let’s cover what an “Optimizer” is.

When we talk about “solving” a problem we usually think of finding one right answer. But not all problems have one right answer. Lots of real world problems actually have an infinite number of solutions that are all “right”. But even if they are all “right” some of those solutions will be better than others.

For example, imagine you run an oil refinery. By adjusting the refinery’s temperature and pressure you discover you can increase or decrease the amount of oil you refine per hour. Any temperature and pressure setting that refines enough oil to pay your bills is a “right” answer, but there are “better” settings that will refine more oil per hour and earn you enough cash to not just pay the bills but also give your employees a big bonus and buy yourself a Ferrari.

Obviously we aren’t going to settle for just any old “right” answer when there is a “better” answer and a Ferrari on the line. This process of trying to find better solutions even after finding one “right” answer is known as “Optimization” and there are lots of different ways to do it.

The “Particle Swarm Optimizer” approach to optimization is to basically make a whole bunch of random guesses at possible best solutions. You then look at which guess performed the best and use that information to adjust all of your guesses. You then see what the best solution from your adjusted guesses was and do the whole thing over again. After several thousand or million random guesses (depending on how much time you can spare) you should eventually have settled on one best guess.
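To make that loop a little more concrete, here is a rough Python sketch of the guess, check and adjust cycle just described. Fair warning: this is a stripped-down illustration and not a full particle swarm optimizer. The refinery formula `barrels_per_hour`, the function name `guess_and_adjust`, the search ranges and the size of the random nudge are all made up for this example.

```python
import random

def barrels_per_hour(temperature, pressure):
    # Made-up refinery model (an assumption for illustration only):
    # output peaks at 1000 when temperature is 350 and pressure is 20.
    return 1000 - (temperature - 350) ** 2 - 10 * (pressure - 20) ** 2

def guess_and_adjust(score, bounds, n_guesses=30, rounds=200, seed=0):
    rng = random.Random(seed)
    # Start with a bunch of random guesses spread across the allowed ranges.
    guesses = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_guesses)]
    best = max(guesses, key=lambda g: score(*g))[:]
    for _ in range(rounds):
        for g in guesses:
            for i, (lo, hi) in enumerate(bounds):
                # Pull each guess part of the way toward the best guess so far...
                pull = rng.random() * (best[i] - g[i])
                # ...plus a small random nudge so the guesses keep exploring.
                jitter = rng.gauss(0, 0.01 * (hi - lo))
                g[i] = min(hi, max(lo, g[i] + pull + jitter))
        # Check whether any adjusted guess beats the old best.
        round_best = max(guesses, key=lambda g: score(*g))
        if score(*round_best) > score(*best):
            best = round_best[:]
    return best, score(*best)

# Search temperatures from 0 to 700 and pressures from 0 to 40.
best_settings, best_output = guess_and_adjust(
    barrels_per_hour, [(0.0, 700.0), (0.0, 40.0)]
)
print(best_settings, best_output)
```

Notice that the loop never needs to know anything about the formula inside `barrels_per_hour`. It only asks "how good was this guess?" and moves on.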

But why is this called a “Particle Swarm Optimizer” and not an “Intelligent Mass Guessing Optimizer”? (Besides the obvious fact that the first name is much much cooler)

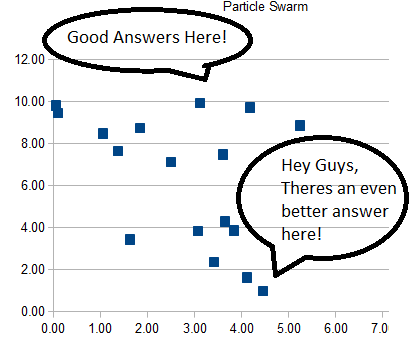

Well, let’s go back to our oil refinery example where we can adjust the temperature and pressure. Every guess we make will just be a temperature paired with a pressure. Now let’s make a graph where the X axis is temperature and the Y axis is pressure. Now let’s mark down every guess we’ve made so far. You should end up with a big cloud of random dots.

Now every time we update our guess we also update our graph. The cloud of random dots will start to move around and eventually drift towards good answers. If you squint a little it will look like the dots are working together to explore new possible solutions. In fact, it will look like a big swarm of particles drifting through space and gathering around good solutions.

Hence “Particle Swarm Optimizer”.

Particle Swarm Weaknesses

Now that you know how particle swarm optimizers more or less work you can probably start to see a few potential problems.

First off, particle swarms only work in situations where you can make random guesses and get an answer back immediately. Making millions of guesses isn’t a good strategy if you have to wait several hours or days to find out which guesses did well and which flopped. Usually this means that you can only use a particle swarm to optimize problems that you understand well enough to program into your computer.

For instance, if there is a complex equation explaining how temperature and pressure influence your hypothetical oil refinery you could use a particle swarm to optimize that equation. On the other hand if you’re really not sure how your oil refinery works* particle swarm optimization would be a really bad idea. Every time your program made a new guess you would have to physically adjust the refinery, take some physical measurements and then type them into your computer. Yuck.

So particle swarms only work with complete equations or with automated testing equipment that can perform experiments and report results really really fast.

Also, because particle swarms operate by making intelligent guesses there is no actual guarantee that they will find the “best” solution available. There is always a risk that the swarm will drift past the “best” answer and instead get attached to a “good” answer. This is actually a common problem with lots of AI techniques though, not just particle swarms. And as you’ll see in a few paragraphs this is actually less of a problem for particle swarms than it is for many other algorithms.

Why Use A Particle Swarm Optimizer?

So why would we want to use an AI that requires a full mathematical description of the problem we want to solve, especially if it can’t even guarantee a truly optimal solution? If we’ve already managed to reduce our problem to an equation can’t we just use calculus to find the true best value?

Excellent question, clever reader. If you are just trying to optimize a two variable equation you probably are better off using calculus and just outright solving the thing. Not every problem needs an AI.

But lots of problems are too complicated to solve with calculus, or at least too complicated to solve quickly. Using calculus to solve a seventeen variable equation is really really hard. If some of the variables are dependent on each other it gets even harder. We’re talking about the sort of problem that would be worth a PhD.

But the particle swarm’s guessing approach works just as well in seventeen dimensions** as it does in two. It doesn’t really care how hard it is to derive or integrate an equation because it never has to do either of those things. It just guesses, checks and adjusts.
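Here is a hedged sketch of what that looks like in code. It uses the common textbook form of a particle swarm, where each particle has a velocity and remembers its own best position, which is more detail than this post has covered so far. The `sphere` test function, the function name `particle_swarm`, the inertia value 0.7 and the two pull weights of 1.5 are assumptions picked for illustration. Also note that this version hunts for the lowest cost instead of the highest score.

```python
import random

def sphere(position):
    # Stand-in problem: sum of squares, lowest (0) when every coordinate is 0.
    return sum(x * x for x in position)

def particle_swarm(cost, dims, n_particles=40, rounds=300,
                   lo=-10.0, hi=10.0, seed=0):
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dims)] for _ in range(n_particles)]
    vel = [[0.0] * dims for _ in range(n_particles)]
    own_best = [p[:] for p in pos]          # best spot each particle has seen
    own_cost = [cost(p) for p in pos]
    lead = min(range(n_particles), key=lambda i: own_cost[i])
    swarm_best, swarm_cost = own_best[lead][:], own_cost[lead]
    history = [swarm_cost]                  # best cost after each round
    for _ in range(rounds):
        for i in range(n_particles):
            for d in range(dims):
                # Keep some old momentum, then pull toward the particle's own
                # best spot and toward the best spot the whole swarm has found.
                vel[i][d] = (0.7 * vel[i][d]
                             + 1.5 * rng.random() * (own_best[i][d] - pos[i][d])
                             + 1.5 * rng.random() * (swarm_best[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            c = cost(pos[i])
            if c < own_cost[i]:
                own_best[i], own_cost[i] = pos[i][:], c
                if c < swarm_cost:
                    swarm_best, swarm_cost = pos[i][:], c
        history.append(swarm_cost)
    return swarm_best, history

# The exact same function handles two dimensions or seventeen.
for dims in (2, 17):
    best, history = particle_swarm(sphere, dims)
    print(dims, "dimensions: best cost went from", history[0], "to", history[-1])
```

The only thing that changes between the two runs is the `dims` argument. No derivatives, no integrals, just more numbers per guess.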

So you could spend ten years trying to solve an impossible calculus problem… or you could just plug it into a swarm intelligence, wait an hour or two and get an answer that’s probably close enough to the true optimum for all practical purposes.

But what if you are really worried about missing out on the true optimum? Well, particle swarms are less likely to miss true optimums than many other types of AI. This is because the particles start out spread all over the search space. This increases the chance that at least one particle will notice really good data and let the rest of the swarm know that they are looking in the wrong place. A less swarmy algorithm that starts looking in the wrong place is more likely to just get stuck there.

Finally, particle swarms are useful because of their ability to handle “rough” data. Imagine a piecewise function where you use one equation when temperature is below 100 degrees and another equation when it is above 100 degrees. Lots of problem solving algorithms will throw a fit when they hit that gap between equations. But since the particle swarm is just guessing and checking as it flies through problem-space it doesn’t really care that the data does really weird things around 100 degrees. It just notes whether the end result is better or worse than before and keeps going.
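As a small illustration, here is a sketch of a “rough” function with a jump at 100 degrees, plus a bare-bones guess-and-check search over it. Both formulas inside `refinery_output` are invented for this example, and `swarm_search` is a simplified stand-in, not a full particle swarm optimizer.

```python
import random

def refinery_output(temperature):
    # Hypothetical "rough" model with a sudden jump at 100 degrees.
    if temperature < 100:
        return 0.5 * temperature                     # climbs toward 50
    return 80 - 0.02 * (temperature - 150) ** 2      # drops to 30, peaks at 80

def swarm_search(score, lo, hi, n_guesses=20, rounds=100, seed=1):
    rng = random.Random(seed)
    # Guesses start out spread across the whole temperature range.
    guesses = [rng.uniform(lo, hi) for _ in range(n_guesses)]
    best = max(guesses, key=score)
    for _ in range(rounds):
        for i, g in enumerate(guesses):
            # Move part of the way toward the best guess, plus a small nudge.
            moved = g + rng.random() * (best - g) + rng.gauss(0, 0.01 * (hi - lo))
            guesses[i] = min(hi, max(lo, moved))
        round_best = max(guesses, key=score)
        # All the search ever asks: is this result better than before?
        if score(round_best) > score(best):
            best = round_best
    return best, score(best)

best_temperature, best_output = swarm_search(refinery_output, 0.0, 200.0)
print(best_temperature, best_output)
```

The search never looks at the gap itself. It only compares scores, so the jump at 100 degrees is just another place where some guesses score worse than others.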

Conclusion

Now you know what a swarm intelligence is and have been introduced to particle swarm optimization. Congratulations! You are now part of the less than 1% minority of the population that has ever bothered to study AI in any depth.

But why settle for that? Let’s take it to the next level by actually programming one, putting ourselves in the 1% of the 1% of the population that enjoys amateur AI programming. I have no idea if this is a particularly useful super-minority to be part of… but why let that stop us?

* Why did you buy an oil refinery if you didn’t understand how it worked? Invest more responsibly in the future!

** Have fun imagining a seventeen dimensional swarm of particles flying through seventeen dimensional space.